Artificial Intelligence is rapidly transforming how businesses operate. From marketing automation to HR decisions, AI tools are becoming part of daily operations. But with new technology comes new legal responsibility. For corporate legal departments, especially in-house counsel, understanding how AI fits into the business—and how to manage its risks—has become a top priority.

In-house counsel are not only protecting companies from lawsuits and non-compliance but also shaping internal policies that ensure AI is used fairly, legally, and transparently. This post explores five essential questions in-house counsel are asking about AI today, and why these questions matter more than ever.

1. What Are the Legal Risks of Using AI in Business Operations?

One of the first concerns raised by in-house counsel is the legal risk associated with AI tools. Unlike traditional software, AI systems can learn from data and make decisions without human intervention. This adds a layer of unpredictability, which can increase the chance of unintended consequences.

The key legal risks often include:

- Liability for harm caused by AI decisions

- Lack of transparency in how AI makes decisions

- Unclear responsibility when AI tools malfunction or behave unexpectedly

Legal departments are reviewing contracts and internal practices to address these risks. Many are asking vendors to include clauses that explain how AI decisions are made and what support is offered in case of disputes or errors. They are also seeking internal documentation of AI decisions, including audit logs, model explanations, and risk assessments that can be used if a legal issue arises.
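
As a rough illustration of what an audit log entry for an AI decision might capture, here is a minimal Python sketch. The record fields, the `AIDecisionRecord` class, and the example tool name are all hypothetical; a real schema would depend on the tool, the vendor contract, and the company's retention rules.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AIDecisionRecord:
    """One audit-log entry for a decision made or assisted by an AI tool.

    All field names are illustrative assumptions, not a standard schema.
    """
    tool_name: str                         # internal name of the AI system
    model_version: str                     # version in use when the decision was made
    decision: str                          # outcome, e.g. "advance_to_interview"
    explanation: str                       # plain-language summary of the main factors
    human_reviewer: Optional[str] = None   # None if nobody reviewed the decision
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: AIDecisionRecord) -> str:
    """Serialize a record as a single JSON line for an append-only log file."""
    return json.dumps(asdict(record), sort_keys=True)


record = AIDecisionRecord(
    tool_name="resume-screener",
    model_version="2024-05",
    decision="advance_to_interview",
    explanation="Matched 4 of 5 required skills",
    human_reviewer="j.doe",
)
line = to_log_line(record)
print(line)
```

Writing one JSON object per line keeps the log easy to search later, which matters if the records are ever needed in a dispute.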

2. Are We Complying with Existing and Emerging AI Regulations?

The global regulatory landscape around artificial intelligence is growing quickly. The European Union’s AI Act, for example, introduces tiered levels of risk for AI systems and strict rules for high-risk use cases like employment, finance, or healthcare.

In-house legal teams are closely monitoring regulatory developments, especially in:

- The European Union (EU AI Act)

- The United States (state-level laws like California’s CCPA and emerging federal AI frameworks)

- Canada, the UK, and other regions developing AI-related legislation

Compliance in this area isn’t static. What is acceptable today may not be tomorrow. In-house counsel are working with compliance officers and department heads to classify AI tools by risk and develop internal protocols for high-risk systems. Some companies are even creating AI registries (internal lists of all AI tools in use) so they can monitor updates and schedule legal reviews on time.
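
To make the registry idea concrete, here is a small Python sketch of an internal AI registry that assigns each tool a risk tier and flags tools whose legal review is overdue. The tier names, review intervals, and classification rule are simplified assumptions for illustration only; they are not the EU AI Act's actual categories or any regulator's requirements.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical internal policy: how often each tier needs a legal review.
REVIEW_INTERVAL_DAYS = {
    RiskTier.HIGH: 90,
    RiskTier.LIMITED: 180,
    RiskTier.MINIMAL: 365,
}

# Illustrative high-risk use cases, taken from the examples in the text.
HIGH_RISK_USES = {"employment", "finance", "healthcare"}


@dataclass
class RegistryEntry:
    name: str
    owner_department: str
    use_case: str
    last_legal_review: date
    customer_facing: bool = False

    @property
    def risk_tier(self) -> RiskTier:
        """Simplified rule: sensitive use cases are high risk,
        other customer-facing tools are limited risk, the rest minimal."""
        if self.use_case in HIGH_RISK_USES:
            return RiskTier.HIGH
        if self.customer_facing:
            return RiskTier.LIMITED
        return RiskTier.MINIMAL


def reviews_due(registry: list, today: date) -> list:
    """Return the names of tools whose review interval has elapsed."""
    due = []
    for entry in registry:
        age_days = (today - entry.last_legal_review).days
        if age_days >= REVIEW_INTERVAL_DAYS[entry.risk_tier]:
            due.append(entry.name)
    return due


registry = [
    RegistryEntry("resume-screener", "HR", "employment", date(2024, 1, 10)),
    RegistryEntry("support-chatbot", "Support", "customer_service",
                  date(2024, 1, 10), customer_facing=True),
    RegistryEntry("meeting-summarizer", "Operations", "internal_productivity",
                  date(2024, 1, 10)),
]
overdue = reviews_due(registry, today=date(2024, 6, 1))
print(overdue)
```

In this example only the hiring tool is flagged, because its high-risk tier carries the shortest review interval. In practice the classification would be a legal judgment recorded in the registry rather than something computed from a keyword.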

3. How Can We Ensure the Use of AI Is Fair and Free From Bias?

One of the most sensitive concerns around AI is its potential to make biased or discriminatory decisions. AI systems learn from data, and if the data is biased, the outcomes are likely to be biased too.

In-house counsel are especially cautious when AI is used in areas such as:

- Recruitment and hiring

- Credit scoring or loan approvals

- Customer service automation

- Legal and compliance monitoring

The legal risks are tied to anti-discrimination laws, employment rights, and consumer protection. If an AI system rejects job applicants based on biased training data or provides different experiences to different groups of customers, the company could face lawsuits and reputational damage.

To reduce this risk, in-house counsel are working on the following:

- Implementing fairness audits for high-impact AI tools

- Creating AI ethics review boards that include legal, technical, and HR experts

- Requiring human review of all final decisions made by AI
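
One simple check a fairness audit might include is comparing selection rates across groups. The Python sketch below computes each group's selection rate and flags any group whose rate falls below 80% of the highest group's rate, a screening heuristic often called the four-fifths rule. The function names, group labels, and sample data are hypothetical, and a flag here is only a prompt for closer review, not a legal finding of discrimination.

```python
from collections import Counter


def selection_rates(outcomes):
    """Compute the share of positive outcomes per group.

    outcomes: iterable of (group, was_selected) pairs.
    """
    totals, selected = Counter(), Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """Return groups whose impact ratio falls below the threshold."""
    ratios = impact_ratios(selection_rates(outcomes))
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Made-up sample: 50 of 100 selected in group_a, 30 of 100 in group_b.
sample = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
flagged = flag_groups(sample)
print(flagged)
```

Here group_b's selection rate is 60% of group_a's, so it is flagged for review. A real audit would also look at sample sizes, statistical significance, and the job-relatedness of the criteria the tool uses.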

Bias in AI is not just a tech issue—it’s a legal and ethical one. Ensuring fairness helps businesses stay out of court and keep public trust.

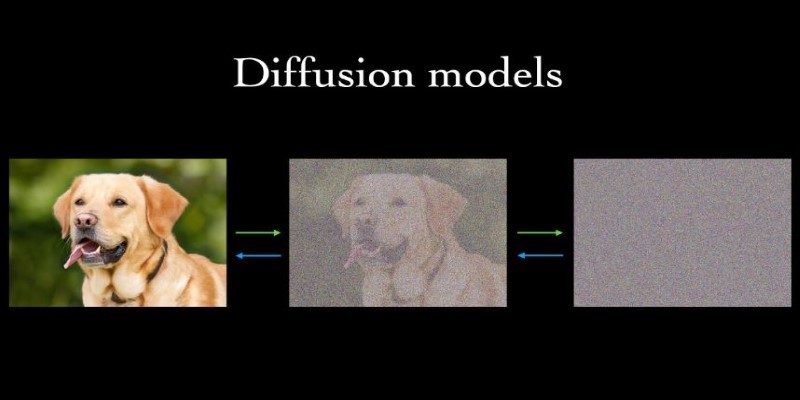

4. Who Owns the Output Created by AI?

As more departments start using AI to generate reports, code, marketing materials, or even legal documents, a new question emerges: Who owns the content? This issue becomes complex when content is generated entirely by AI tools. In many countries, current copyright laws don’t grant protection to works created without human involvement. That raises questions about:

- Whether AI-generated content can be copyrighted

- Whether businesses can claim exclusive rights to content created using third-party AI tools

- Whether AI output inadvertently reproduces existing copyrighted material

In-house legal teams are reviewing content creation processes to make sure that:

- Human review is involved in the final content

- Usage rights are clearly defined in AI software agreements

- Internal teams avoid fully automating creative work without proper oversight

Some are even including new clauses in contracts to address the use of generative AI and intellectual property rights.

5. Do You Have Adequate Internal Controls for AI Usage?

Finally, corporate legal departments are asking whether the organization has the right internal structure to manage AI effectively. As employees across departments start experimenting with tools like ChatGPT, Copilot, and Midjourney, the lack of internal control can lead to risky behavior.

In-house counsel want answers to these internal policy questions:

- Is there a clear AI usage policy across all teams?

- Are employees trained on safe and legal use of AI tools?

- Is someone responsible for monitoring AI usage and risks?

Some legal departments are now helping set up AI governance committees or task forces that oversee how AI is adopted across the organization. These teams are responsible for:

- Setting guidelines for ethical AI use

- Reviewing third-party AI tool usage before approval

- Logging and reviewing AI-generated content or decisions

They also recommend regular training programs for employees to help them understand where the legal lines are—and how to stay within them.

Conclusion

AI is transforming business operations, but it brings serious legal, ethical, and compliance challenges. In-house counsel are playing a key role in identifying and managing these risks. From data bias to content ownership, their questions help organizations stay proactive and protected. Legal teams are now deeply involved in setting internal AI policies and ensuring regulatory compliance. Their oversight ensures AI is used responsibly and aligns with the company’s values. By addressing concerns early, businesses can unlock AI’s benefits without legal fallout. Ultimately, legal guidance is essential for safe and sustainable AI adoption.