Language is messy, unpredictable, and full of hidden meanings. Yet artificial intelligence is getting better at understanding it, thanks to NLP techniques like the Masked Language Model (MLM). Unlike older models that read text in only one direction, MLMs predict missing words by analyzing the full context on both sides, much like how humans infer meaning from incomplete sentences.

This breakthrough has revolutionized search engines, chatbots, and translation tools. But how do these models actually work? What makes them so effective? To truly appreciate their impact, we need to uncover the mechanics behind MLMs and their role in modern AI.

How Does The Masked Language Model Work?

Unlike traditional language models that predict the next word in a sentence, a Masked Language Model (MLM) is trained by hiding a random subset of the words in a sentence (about 15% of tokens in BERT) and asking the model to predict the originals. Because the hidden word can sit anywhere in the sentence, the model must use both the preceding and the following words at once. This bidirectional learning is a critical advancement over previous sequence-based NLP algorithms.
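The masking step described above can be sketched in a few lines of Python. This is a minimal illustration, not BERT's actual preprocessing: it works on whitespace-split words rather than subword tokens, and the names `mask_tokens`, `mask_prob`, and `seed` are assumptions made for this example.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Hide each token independently with probability mask_prob.

    Returns (masked_tokens, labels). labels[i] holds the original token
    at masked positions and None everywhere else, so a training loop
    would only score the model on the positions it could not see.
    """
    rng = random.Random(seed)  # local RNG so results are reproducible
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels

sentence = "She placed the book on the table before leaving".split()
masked, labels = mask_tokens(sentence, mask_prob=0.3, seed=0)
print(" ".join(masked))
print(labels)
```

The key design point is that the labels exist only at masked positions. The model is never rewarded for copying a word it can already see, only for reconstructing a hidden word from its surroundings.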

For instance, consider the sentence:

"She placed the [MASK] on the table before leaving."

A traditional left-to-right model sees only the words before the mask, "She placed the", when guessing the missing word. An MLM, through bidirectional learning, also sees "on the table before leaving", so it can make a more informed, context-sensitive guess. Words like "book" or "cup" are strong candidates here because they fit both sides of the gap.

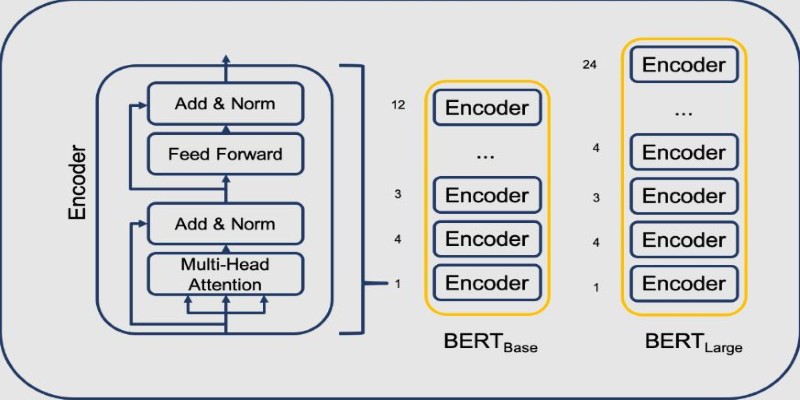
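To make the difference between one-sided and two-sided context concrete, here is a toy counting sketch. It is not a neural model and not how BERT works internally; it only shows how adding the right-hand neighbor narrows the candidates. The corpus and the function names `left_only_counts` and `both_sides_counts` are invented for this illustration.

```python
from collections import Counter

# Tiny invented corpus, used only to illustrate the idea.
CORPUS = [
    "she put the book on the table",
    "he put the cup on the shelf",
    "they read the news every morning",
    "we read the news at night",
    "i watched the news last night",
]

def left_only_counts(corpus, left):
    """Count words that directly follow `left` (one-sided context)."""
    counts = Counter()
    for line in corpus:
        words = line.split()
        for i in range(1, len(words)):
            if words[i - 1] == left:
                counts[words[i]] += 1
    return counts

def both_sides_counts(corpus, left, right):
    """Count words that sit between `left` and `right` (two-sided context)."""
    counts = Counter()
    for line in corpus:
        words = line.split()
        for i in range(1, len(words) - 1):
            if words[i - 1] == left and words[i + 1] == right:
                counts[words[i]] += 1
    return counts

# Query: "She placed the [MASK] on the table ..."
left_only = left_only_counts(CORPUS, "the")
both_sides = both_sides_counts(CORPUS, "the", "on")

# With only the left neighbor, the most frequent follower of "the"
# in this toy corpus is "news", which does not fit the sentence.
print(left_only.most_common(1))
# With both neighbors, only "book" and "cup" remain as candidates.
print(sorted(both_sides))
```

A real MLM learns these preferences from far more context than a single neighbor on each side, but the effect is the same in spirit: information from the right of the gap rules out guesses that looked reasonable from the left alone.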

Contextual language processing gives MLMs an advantage over earlier models such as RNNs, which process text sequentially, one token at a time. Masked-word prediction is the training objective behind BERT (Bidirectional Encoder Representations from Transformers), which became a common baseline for NLP-based AI applications.

The Role of MLMs in NLP Advancements

Masked Language Models (MLMs) serve as the foundation for many cutting-edge NLP models, most notably BERT. Before BERT, NLP systems relied heavily on recurrent neural networks (RNNs) and other sequence-based models, which struggled with long-range dependencies because information had to be carried step by step across the sentence. Bidirectional learning in MLMs marked a breakthrough: the model attends to words on both sides of a position at the same time, which significantly improves language comprehension.

A key strength of MLMs is their ability to manage polysemy, where a word carries multiple meanings based on context. Consider the word "bank." The sentence "She went to the bank to withdraw cash" refers to a financial institution, whereas "He sat by the river bank" denotes a landform. Models that assign a single fixed representation to each word cannot separate these senses, but an MLM builds its representation of "bank" from the surrounding words, which makes it much better at disambiguation.
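The idea that the same word gets a different representation in different contexts can be illustrated with a deliberately crude stand-in. The sketch below represents each occurrence of "bank" by a bag of its neighboring words and compares occurrences with cosine similarity. Real MLMs learn dense vectors through a neural network; the bag-of-neighbors approach, the `window` size, the function names, and the third example sentence are all assumptions made for this illustration.

```python
import math
from collections import Counter

def context_vector(sentence, target, window=3):
    """Represent one occurrence of `target` by counts of its neighbors."""
    words = sentence.lower().replace(".", "").split()
    idx = words.index(target)
    start = max(0, idx - window)
    neighbors = words[start:idx] + words[idx + 1:idx + 1 + window]
    return Counter(neighbors)

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)  # Counter returns 0 for missing keys
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

finance = context_vector("She went to the bank to withdraw cash", "bank")
river = context_vector("He sat by the river bank", "bank")
# Hypothetical extra sentence using the financial sense again.
finance_2 = context_vector("He went to the bank to deposit cash", "bank")

print(round(cosine(finance, finance_2), 3))  # two financial uses
print(round(cosine(finance, river), 3))      # financial vs. river use
```

The two financial uses score much closer to each other than either does to the river use, even though the target word is identical in all three sentences. A fixed, one-vector-per-word representation would treat all three occurrences as the same.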

Beyond word-level analysis, MLMs contribute to sentence-level comprehension, improving search engine accuracy, machine translation, and AI-driven writing assistants. Learning to predict masked words forces a model to pick up grammar, syntax, and idiomatic usage, which makes MLMs integral to modern AI applications. As NLP algorithms evolve, MLMs continue to play a crucial role in bridging the gap between human language and artificial intelligence, shaping the future of AI-powered communication.

Challenges and Ethical Considerations

Despite their advanced capabilities, Masked Language Models (MLMs) come with several challenges and ethical concerns. One of the most significant issues is bias in training data. Since MLMs learn from large-scale text datasets, they can inadvertently absorb and reinforce societal biases present in the data. If these biases go unchecked, they can lead to unfair or discriminatory outputs, particularly in applications like automated hiring systems, customer service AI, and legal decision-making tools.

Another major hurdle is the high computational cost of training powerful MLMs. Training state-of-the-art models requires enormous processing power and large-scale datasets, making it difficult for smaller organizations to compete with tech giants. Additionally, MLMs do not truly understand language but rely on statistical associations. This can lead to plausible but incorrect outputs: a model may fill a masked word with whatever appeared most often in similar contexts during training, whether or not it is factually correct, sometimes producing misleading or biased results.
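The "frequency over facts" failure mode can be shown with a purely count-based filler. This is a toy stand-in for a trained model, and the corpus is invented for the example: it repeats a common misconception more often than the correct statement. The function name `most_frequent_fill` is likewise an assumption.

```python
from collections import Counter

# Invented toy corpus: the wrong answer appears more often than the right one.
TOY_CORPUS = [
    "the capital of australia is sydney",    # common misconception
    "the capital of australia is sydney",    # repeated
    "the capital of australia is canberra",  # correct, but rarer here
]

def most_frequent_fill(corpus, template):
    """Return the filler for [MASK] seen most often in the corpus, or None."""
    before, after = template.split("[MASK]")
    counts = Counter()
    for line in corpus:
        # Only consider lines long enough to contain both parts plus a filler.
        if (len(line) > len(before) + len(after)
                and line.startswith(before) and line.endswith(after)):
            filler = line[len(before):len(line) - len(after)]
            counts[filler] += 1
    if not counts:
        return None
    return counts.most_common(1)[0][0]

guess = most_frequent_fill(TOY_CORPUS, "the capital of australia is [MASK]")
print(guess)  # the most frequent filler, not the correct one
```

Here the filler chosen is "sydney" because it is the majority answer in the data, even though the capital is Canberra. A neural MLM is far more sophisticated than this counter, but it inherits the same basic dependence on what its training text says most often.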

To mitigate these challenges, researchers are developing techniques to reduce bias, improve model interpretability, and enhance energy efficiency. Methods such as fine-tuning on curated datasets and embedding ethical AI principles into development are helping improve fairness. However, as NLP technology advances, ensuring responsible AI development will remain a crucial focus in making these models more transparent, fair, and reliable.

The Future of Masked Language Models

Masked Language Models are set to shape the future of NLP, strengthening AI's ability to understand human language. Advancements will focus on improving efficiency, reducing bias, and enhancing transparency in AI decision-making. Researchers are also working on multilingual models that handle many languages within a single system, expanding their usefulness across diverse applications.

The influence of MLMs is already evident in industries such as healthcare, education, and customer service. AI-driven tools like chatbots, translation systems, and content recommendation engines leverage MLMs to deliver more accurate and context-aware responses. As technology progresses, these models will become more refined, making machine-human interactions smoother and more intuitive.

Despite challenges like ethical concerns and computational demands, continued research aims to make MLMs more reliable and responsible. Their evolving role in shaping NLP remains pivotal, ensuring AI-powered systems better understand and engage with human language in meaningful and contextually relevant ways. The future of NLP is undoubtedly tied to the rapid advancement of MLMs and their integration into various real-world applications.

Conclusion

The Masked Language Model has transformed how NLP algorithms interpret human language, enabling AI to process words with greater context and accuracy. By leveraging bidirectional learning and contextual language processing, MLMs have improved search engines, translations, and AI-driven writing assistants. However, challenges like bias and high computational demands remain hurdles to widespread adoption. As advancements continue, future models will be more efficient, ethical, and multilingual. The Masked Language Model is a foundational technology shaping AI’s evolution, ensuring that machines understand language with increasing precision, ultimately enhancing the way humans interact with artificial intelligence.