Long Short-Term Memory (LSTM) neural networks have become a cornerstone of artificial intelligence, particularly for processing data that unfolds over time. Imagine trying to understand a sentence, predict stock prices, or transcribe speech: each of these tasks requires not only analyzing the present input but also recalling what came before. Feedforward neural networks have no built-in memory of earlier inputs, and simple recurrent networks tend to lose older context, but LSTMs are designed to remember relevant information and discard what is unnecessary.

This ability to retain context across long sequences has made LSTMs a widely used tool in domains such as natural language processing, time-series forecasting, and even medicine. Let's look at how LSTMs work and why they matter.

Understanding LSTM Neural Networks

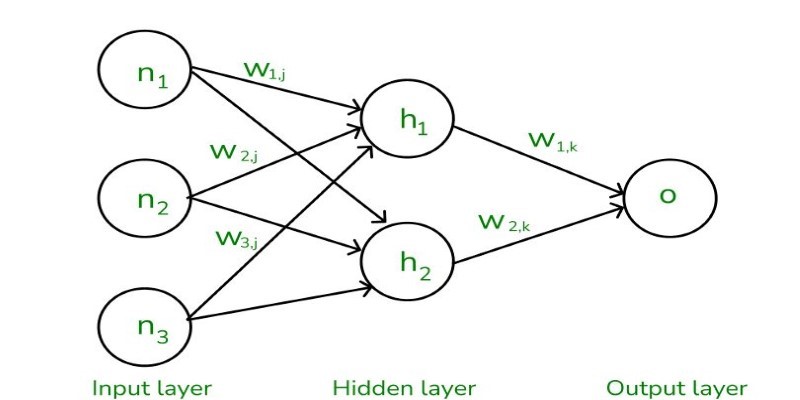

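LSTMs belong to the family of recurrent neural networks (RNNs), but with a key difference: controlled memory. In a traditional RNN, the hidden state computed at one time step is fed back as an input to the next, allowing earlier inputs to influence later outputs. However, as sequences grow longer, standard RNNs run into what is known as the vanishing gradient problem. During training with backpropagation through time, the gradient is multiplied by a factor at each step it travels back through the sequence; when those factors are smaller than one, the gradient shrinks exponentially. As a result, early inputs have little to no effect on the weight updates, which limits the ability of RNNs to learn long-term dependencies.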
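The shrinking gradient can be seen in a toy setting. The sketch below assumes a scalar RNN with a tanh activation, h_t = tanh(w * h_{t-1} + u * x_t), where `w` and `u` are hypothetical weights chosen for illustration, and applies the chain rule to compute how much the final hidden state depends on the initial one.

```python
import math

def rnn_gradient_through_time(w, u, inputs, h0=0.0):
    """Return dh_T/dh_0 for a scalar RNN h_t = tanh(w * h_{t-1} + u * x_t).

    w and u are hypothetical recurrent and input weights for illustration.
    """
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + u * x)
        # Chain rule: dh_t/dh_{t-1} = w * (1 - tanh^2(...)) = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad

# Longer sequences multiply more per-step factors together.
for T in (5, 20, 50):
    g = rnn_gradient_through_time(w=0.9, u=0.5, inputs=[1.0] * T)
    print(f"T={T:2d}  dh_T/dh_0 = {g:.3e}")
```

Here every per-step factor has magnitude at most |w| = 0.9, so the gradient shrinks at least as fast as 0.9^T, and faster still when the tanh units saturate (h_t near ±1).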

LSTMs address this by adding a memory cell, often called the cell state, which is updated additively and regulated by gates so that information can persist across many time steps. A single LSTM unit has three gates:

Forget Gate: This gate decides what information from the past should be discarded. It looks at the previous hidden state and the current input and, for each element of the cell state, outputs a value between 0 (discard completely) and 1 (keep completely).

Input Gate: This gate regulates how much new information is written to the cell state. It scales a vector of candidate values computed from the same inputs, so only relevant details are stored and the memory is not overwhelmed by unnecessary ones.

Output Gate: This gate controls how much of the stored memory is exposed as the hidden state, which serves both as the unit's output at the current step and as an input to the next step in the sequence.
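The three gates can be written compactly as equations. The block below follows the commonly used formulation without peephole connections; the notation is an assumption for illustration, not taken from a specific library: σ is the logistic sigmoid, ⊙ is element-wise multiplication, x_t is the input at step t, h_{t-1} and c_{t-1} are the previous hidden and cell states, and W, U, b are learned weights and biases.

```latex
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{forget gate} \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{input gate} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{candidate values} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{cell state update} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{output gate} \\
h_t &= o_t \odot \tanh(c_t) && \text{hidden state (output)}
\end{aligned}
```

The cell state is updated additively: along the direct path from c_{t-1} to c_t the derivative is simply f_t, so while the forget gate stays close to 1, gradients can pass through many steps without the repeated squashing that affects a simple RNN.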

By carefully managing which details are kept, updated, or removed, LSTMs mitigate the limitations of traditional RNNs and can preserve dependencies over much longer spans.
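To make the gate mechanics concrete, here is a minimal, dependency-free sketch of the forward pass of one LSTM layer in the standard formulation (no peephole connections). The helper names (`init_params`, `lstm_step`, `lstm_forward`) and the small random initialization are hypothetical. Frameworks such as PyTorch's `nn.LSTM` or Keras's `LSTM` layer vectorize this computation and learn the weights by backpropagation through time, which this sketch omits.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b with plain lists (rows of W and U per hidden unit)."""
    return [
        sum(w * xi for w, xi in zip(W_row, x)) + sum(u * hi for u, hi in zip(U_row, h)) + b_j
        for W_row, U_row, b_j in zip(W, U, b)
    ]

def init_params(input_size, hidden_size, seed=0):
    """Hypothetical small random init: one (W, U, b) triple per gate."""
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {
        gate: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
        for gate in ("forget", "input", "candidate", "output")
    }

def lstm_step(params, x, h_prev, c_prev):
    """One time step: returns the new hidden state h and cell state c."""
    f = [sigmoid(z) for z in affine(*params["forget"], x, h_prev)]          # what to keep
    i = [sigmoid(z) for z in affine(*params["input"], x, h_prev)]           # how much to write
    c_tilde = [math.tanh(z) for z in affine(*params["candidate"], x, h_prev)]  # what to write
    o = [sigmoid(z) for z in affine(*params["output"], x, h_prev)]          # what to expose
    c = [fj * cj + ij * cdj for fj, cj, ij, cdj in zip(f, c_prev, i, c_tilde)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c

def lstm_forward(params, sequence, hidden_size):
    """Run the cell over a sequence of input vectors, starting from zero states."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    outputs = []
    for x in sequence:
        h, c = lstm_step(params, x, h, c)
        outputs.append(h)
    return outputs, h, c

params = init_params(input_size=3, hidden_size=4)
seq = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.5], [0.3, 0.3, 0.3]]
outputs, h, c = lstm_forward(params, seq, hidden_size=4)
print(len(outputs), len(h))  # 3 4
```

Keeping the forget, input, candidate, and output computations as separate named blocks mirrors the gate descriptions; real implementations usually stack all four weight matrices into one so a single matrix multiply serves every gate.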

Key Applications of LSTMs in AI

LSTM neural networks have transformed applications across many industries by improving the ability of AI to understand and predict sequential data. Their flexible memory structure makes them useful in a variety of areas, including:

Natural Language Processing (NLP)

LSTMs are widely used in natural language processing, where they help models interpret words in the context of long sequences. Traditional RNNs often fail to retain information from early in a sentence, leading to errors in tasks like machine translation, chatbots, and text summarization. LSTMs carry information about earlier words forward in the cell state, which helps preserve sentence structure and meaning and improves the accuracy of translations and responses. This ability to retain past context is essential for producing coherent, accurate output in text-based applications.

Speech Recognition

In speech recognition, LSTMs help AI systems process spoken words in the order they occur. Voice assistants such as Siri and Google Assistant depend on this kind of sequential memory to interpret a whole utterance rather than isolated sounds. Models without memory of earlier input often produce incoherent transcriptions. LSTMs improve on this by carrying earlier context forward, enabling more accurate, context-aware recognition, which has made them a key component of voice-based applications and transcription services.

Time-Series Forecasting

LSTMs perform well in time-series forecasting, where they learn patterns in historical data to predict future values. In areas like stock market analysis, weather forecasting, and business demand prediction, LSTMs can pick up subtle shifts that simpler models may miss. Their ability to learn long-term dependencies lets them capture complex time-dependent relationships, which is valuable in fields such as finance and market analysis, where even small changes in the data can significantly affect decisions.
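Before an LSTM can be trained on a time series, the series is usually framed as supervised examples: a window of past values paired with the value to predict. The sketch below shows one common way to do this; the function name `make_windows` and its `window` and `horizon` parameters are hypothetical.

```python
def make_windows(series, window, horizon=1):
    """Split a 1-D series into (input window, target) pairs.

    Each input is `window` consecutive values; the target is the value
    `horizon` steps after the last value in the window.
    """
    inputs, targets = [], []
    for start in range(len(series) - window - horizon + 1):
        end = start + window
        inputs.append(series[start:end])
        targets.append(series[end + horizon - 1])
    return inputs, targets

series = [10, 11, 12, 13, 14, 15]
X, y = make_windows(series, window=3)
# X → [[10, 11, 12], [11, 12, 13], [12, 13, 14]]
# y → [13, 14, 15]
```

Each window is then fed to the network one value per time step, and the LSTM's final hidden state is mapped to the forecast. The window length is itself a hyperparameter: it caps how much history the model sees in each training example.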

Healthcare and Medical Diagnosis

LSTMs are increasingly used in healthcare to analyze patient data over time, improving diagnosis and disease prediction. By considering past symptoms, test results, and treatments, LSTMs assist in chronic disease management and medical imaging. For example, in ECG analysis, they monitor heart rate variations, detecting potential anomalies early. This ability to track changes over time helps healthcare professionals make more informed decisions and identify conditions before they escalate, potentially saving lives.

Challenges and Limitations of LSTMs

Despite their many advantages, LSTM neural networks are not without challenges.

High Computational Costs

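One of the biggest drawbacks of LSTMs is their computational cost. At every time step, each LSTM unit computes three gates plus a candidate cell update, each with its own weights, so an LSTM layer has roughly four times as many parameters as a simple RNN layer of the same size. Each step also depends on the result of the previous one, so the computation cannot be parallelized across time steps. Together, these make training large-scale LSTM models time-consuming, especially on massive datasets.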
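The "four times" figure follows from counting weights. In the sketch below (function names are hypothetical), each of the four blocks (forget, input, and output gates plus the candidate update) has an input weight matrix, a recurrent weight matrix, and bias terms. Keras's `LSTM` layer uses one bias vector per block, while PyTorch's `nn.LSTM` keeps two (`bias_ih` and `bias_hh`), which is why the bias count is a parameter here.

```python
def lstm_param_count(input_size, hidden_size, biases_per_gate=1):
    """Trainable parameters in one LSTM layer.

    Four blocks (forget, input, output gates and the candidate update), each with
    an input weight matrix (hidden x input), a recurrent weight matrix
    (hidden x hidden), and `biases_per_gate` bias vectors of length hidden.
    """
    per_block = (hidden_size * input_size
                 + hidden_size * hidden_size
                 + biases_per_gate * hidden_size)
    return 4 * per_block

def simple_rnn_param_count(input_size, hidden_size):
    """The same count for a vanilla (Elman) RNN layer with one bias vector."""
    return hidden_size * input_size + hidden_size * hidden_size + hidden_size

print(lstm_param_count(100, 128))                     # 117248
print(lstm_param_count(100, 128, biases_per_gate=2))  # 117760
print(simple_rnn_param_count(100, 128))               # 29312
```

The per-step arithmetic scales with these weight counts, so an LSTM does roughly four times the multiply-adds of a same-sized simple RNN at every time step, on top of the sequential dependency between steps.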

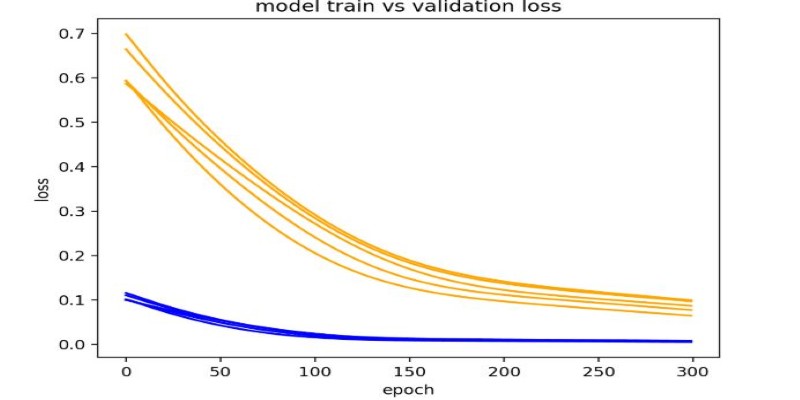

Overfitting Issues

LSTMs can sometimes memorize training data too closely, leading to poor generalization when faced with new inputs. This issue, known as overfitting, can be mitigated with techniques like dropout regularization, which randomly zeroes a fraction of the unit activations during training so that the network cannot rely too heavily on any individual unit.
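A minimal sketch of standard ("inverted") dropout on a vector of activations follows; the function name and signature are hypothetical. Surviving values are scaled by 1 / (1 - p) during training so that the expected activation is unchanged and nothing needs rescaling at inference time.

```python
import random

def inverted_dropout(activations, p, training=True, rng=None):
    """Zero each activation with probability p during training.

    Kept values are scaled by 1 / (1 - p); at inference the input is
    returned unchanged.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() >= p else 0.0 for a in activations]

out = inverted_dropout([1.0] * 10, p=0.5, rng=random.Random(0))
print(out)  # each entry is either 0.0 or 2.0
```

For LSTMs, frameworks expose dropout as layer options rather than requiring it to be hand-written, for example the `dropout` and `recurrent_dropout` arguments of Keras's `LSTM` layer, or the `dropout` argument of PyTorch's `nn.LSTM`, which applies dropout between stacked layers.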

Hyperparameter Sensitivity

Tuning an LSTM network requires careful adjustment of hyperparameters such as the learning rate, the number of hidden units, and the input sequence length. These choices can significantly affect an LSTM model's performance, so finding good values is a complex task that often relies on systematic search or trial and error.
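One common way to make that trial and error systematic is random search: sample configurations from a search space, train and evaluate each, and keep the best. The sketch below is illustrative only. The search ranges are assumptions rather than recommendations, and `fake_train_and_score` is a hypothetical stand-in for real training that returns a validation loss, used only so the example runs end to end.

```python
import math
import random

# Hypothetical search space; ranges are illustrative, not recommendations.
SEARCH_SPACE = {
    "learning_rate": (1e-4, 1e-2),  # sampled log-uniformly
    "hidden_units": [32, 64, 128, 256],
    "sequence_length": [10, 20, 50, 100],
}

def sample_config(rng):
    lo, hi = SEARCH_SPACE["learning_rate"]
    return {
        "learning_rate": 10 ** rng.uniform(math.log10(lo), math.log10(hi)),
        "hidden_units": rng.choice(SEARCH_SPACE["hidden_units"]),
        "sequence_length": rng.choice(SEARCH_SPACE["sequence_length"]),
    }

def random_search(train_and_score, n_trials=20, seed=0):
    """Return the (config, score) pair with the lowest score (e.g. validation loss)."""
    rng = random.Random(seed)
    best_config, best_score = None, float("inf")
    for _ in range(n_trials):
        config = sample_config(rng)
        score = train_and_score(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score

def fake_train_and_score(config):
    # Hypothetical stand-in: pretends loss is lowest near lr=1e-3 and 128 units.
    return abs(math.log10(config["learning_rate"]) + 3) + abs(config["hidden_units"] - 128) / 128

best, score = random_search(fake_train_and_score, n_trials=50)
print(best, score)
```

Sampling the learning rate on a log scale reflects the fact that its useful values span orders of magnitude; in a real setup each call to `train_and_score` would train an LSTM and is by far the expensive part.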

The Future of LSTMs in AI

While LSTMs remain a powerful tool for handling sequential data, newer architectures address some of their limitations. Transformers, for example, have gained popularity in natural language processing because they process all positions of a sequence in parallel rather than step by step as LSTMs do. This makes training on large datasets much faster and has enabled models such as OpenAI's GPT and Google's BERT.

However, LSTMs continue to be relevant in areas where sequential memory is essential, particularly in time-series forecasting, speech processing, and anomaly detection. Researchers are also working on hybrid models that combine the strengths of LSTMs with newer deep-learning techniques to improve performance while reducing computational costs.

Conclusion

LSTM neural networks have revolutionized AI by enabling the processing of sequential data with long-term dependencies. Their memory mechanism, controlled by the forget, input, and output gates, makes them invaluable in tasks like language processing, speech recognition, and time-series forecasting. While they face challenges such as high computational costs and overfitting, LSTMs remain essential in many AI applications. As deep learning evolves, LSTMs are likely to continue playing an important role in building intelligent systems capable of complex, context-aware tasks.